In December 2017, the Institute of Electrical and Electronics Engineers (IEEE) Standards Association approved standard 802.3bs for 200 and 400 Gbps Ethernet. Among other things, the standard cleared the way for 400GBASE-SR16, which called for 32 multimode fibers per MPO connector: 16 transmit and 16 receive, each carrying a 25G NRZ lane. A lot of ink was spilled over 400GBASE-SR16. Yet, within a few years, it had died on the vine.

Turns out that, at that time, putting 32 fibers in a single connector may have been a bridge too far. So, what’s the lesson here? It might just be that, in the search for ever-faster throughput, practicality is every bit as important as speed.

High-speed migration is a relative term; what it means depends on the business model and purpose of the data center operator. To increase capacity, enterprise organizations are moving from duplex to parallel transmission: from 10G to 40G, from 25G to 100G, and above. Industry leaders, meanwhile, are poised to migrate to 800G, with 1.6T after that. Network designers are working through their options, trying to ensure they have the right infrastructure to efficiently deliver higher-speed, lower-latency, cost-effective performance.

On the cabling side, there are a lot of installed 12-fiber links out there (and a few 24-fiber ones). Some facilities switched early on from 12-fiber to 8-fiber for application support, and, more recently, others have decided to leapfrog from the 8-fiber option—jumping directly to a 16-fiber-based infrastructure.

In this blog, we’ll look at some approaches data centers can take to move from their legacy fiber configurations to newer designs that put them on a path to 1.6T and beyond, or to whichever next-generation applications their capacity needs call for. Before diving into solutions, a bit of context.

How we got here

To accelerate data rate development, duplex applications such as 10G, 25G and 50G were grouped into four-lane quad designs to provide reliable and steady migration to 40G, 100G and 200G. The configuration of choice was the 12-fiber MPO, the first MPO connector to be accepted in data centers. Yes, there was a lesser-used 24-fiber variation, but the 12-fiber version was common, convenient and the multifiber interface most often found on the optical modules in switches.

As data centers migrate to 8- or 16-fiber applications for improved performance, the 12- and 24-fiber configurations become less efficient. Breaking out switch capacity using 12- and 24-fiber trunks becomes more of a challenge. The numbers just don’t add up: either capacity is left stranded at the switch port, or multiple trunk cables must be combined into hydra or array cables to fully utilize the fibers at the equipment. Ironically, the same arithmetic that made 12-fiber perfect for duplex applications under 400G makes it much less attractive for parallel applications at 400G and above. Enter the eight-lane “octal” design: eight transmit and eight receive fibers, 16 in all.
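To see why the numbers stop adding up, here is a minimal Python sketch of the division involved. The function name `stranded_fibers` is ours, invented for illustration, and the model is deliberately simple: it only asks how many whole application ports fit into one trunk and how many fibers are left over.

```python
def stranded_fibers(trunk_fibers: int, app_fibers: int) -> tuple[int, int]:
    """Return (ports_supported, fibers_left_unused) for a single trunk.

    Simplifying assumption: fibers are not shared between trunks.
    """
    ports, unused = divmod(trunk_fibers, app_fibers)
    return ports, unused


for trunk in (12, 24):
    for app in (2, 8, 16):
        ports, unused = stranded_fibers(trunk, app)
        print(f"{trunk}-fiber trunk, {app}-fiber application: "
              f"{ports} port(s), {unused} fiber(s) stranded")
```

Under this model, a 12-fiber trunk carries six duplex (2-fiber) links with nothing left over, but only one 8-fiber application with four fibers stranded. A 24-fiber trunk does divide evenly into three 8-fiber groups, although only by way of a breakout array, and it strands eight fibers behind a single 16-fiber application.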

What’s so great about 16-fiber connectivity?

Beginning with 400G, eight-lane octal technology and 16-fiber MPO breakouts became the most efficient multipair building block for trunk applications. Moving from quad-based deployments to octal configurations doubles the number of breakouts—enabling network managers to eliminate switch layers or maximize the fiber presentation and density at the face of a switch, while supporting line rate applications. Today’s applications are optimized for 16-fiber cabling. Supporting 400G and higher applications with 16-fiber technology allows data centers to deliver maximum switch capacity to switches or servers. By the way, 16-fiber groupings also fully support 8-, 4- or 2-fiber applications without compromise or waste.

This 16-fiber design, including matching transceivers, trunk/array cables and distribution modules, becomes the common building block enabling data centers to progress through 400G and beyond. Want to migrate to 800G using 100G lanes? A single transceiver fed by one MPO16 or two MPO8 connections from a common trunk will soon be an option, and one that also provides full backward compatibility. Once a lane rate of 200G comes online, that same idea gets us to 1.6T.
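The lane arithmetic behind that roadmap is easy to check. The sketch below is illustrative only: the function names are ours, the rates are nominal, and it assumes each lane gets its own transmit fiber and its own receive fiber (no wavelength multiplexing or bidirectional optics).

```python
def aggregate_gbps(lanes: int, lane_rate_gbps: int) -> int:
    """Nominal aggregate rate: number of lanes times the per-lane rate."""
    return lanes * lane_rate_gbps


def parallel_fiber_count(lanes: int) -> int:
    """Fibers needed when each lane has one transmit and one receive fiber."""
    return 2 * lanes


OCTAL_LANES = 8

# Octal design at the two lane rates discussed above.
for lane_rate in (100, 200):
    total = aggregate_gbps(OCTAL_LANES, lane_rate)
    fibers = parallel_fiber_count(OCTAL_LANES)
    print(f"{OCTAL_LANES} lanes x {lane_rate}G = {total}G over {fibers} fibers")

# For comparison, the 400GBASE-SR16 layout: 16 lanes of 25G.
print(aggregate_gbps(16, 25), "G over", parallel_fiber_count(16), "fibers")
```

The fiber count stays at 16 as the lane rate rises from 100G to 200G, which is why the same cabling can carry both 800G and 1.6T, while the 16-lane approach needed 32 fibers just to reach 400G.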

Wait, what about my 8-fiber links?

While 16-fiber may be the most efficient configuration for speeds above 400G, there is still some value in the legacy 8-fiber deployments, mainly for those data centers running applications up to 400G. For those currently running 8-fiber trunks that need to be upgraded to 16-fiber, the question is: What’s the best way to handle that, and when?

Essentially, you need to double the number of fibers at the front of the panel to support the same port count within the panel. One way to do that is by switching the existing LC connectors on the front to the smaller SN connectors, provided the fiber panel offers that option. SN packaging provides the footprint needed to at least double the fiber count in the same space as a duplex LC adapter, while using the same-size, proven ferrule. A module with two MPO8 connectors on the rear and eight SN connectors on the front fits in the same space as a module with a single MPO8 and four duplex LC connectors. This change frees up half of the panel space, allowing data centers to double the number of fibers available and support twice as many ports without adding rack space (which is generally not available for Day 2). Additional trunk cables can be added simply and easily with flexible cable managers. This pays significant dividends when it comes to managing Day 2 challenges.

Should I jump straight to 16-fiber trunks?

But what if you’re currently running 12- or 24-fiber trunks and are now ready to move to a more efficient 8- or 16-fiber setup to match the applications? The first consideration is your business model. Should you consider the 8-fiber option if your network team is evaluating applications that may require a 16-fiber link? Great question.

While 8-fiber breakouts sit alongside 12- or 24-fiber solutions for higher-speed applications, a comparison with a 16-fiber design makes a strong business case for forgoing 8-fiber trunk deployments. The key difference between the two? Port counts. Typical MPO port density is 72 per rack unit. If the trunk and applications are both 8-fiber based, that’s 72 application ports. If the applications move to 16 fibers, that same 8-fiber base provides only 36 ports, because each application port now consumes two 8-fiber connectors. By contrast, 16-fiber trunks match the 16-fiber application with 72 ports per RU, but can also support 144 8-fiber ports in that same space.
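The port counts above follow from a single division, sketched here in Python. The constant and function names are ours, and the sketch assumes that fibers from adjacent MPO connectors can be freely regrouped to serve an application port (for example, two MPO8 connectors feeding one 16-fiber port).

```python
MPO_PORTS_PER_RU = 72  # typical density quoted in the text


def app_ports_per_ru(trunk_fibers: int, app_fibers: int,
                     mpo_ports: int = MPO_PORTS_PER_RU) -> int:
    """Application ports supported by one rack unit of MPO connectors."""
    total_fibers = mpo_ports * trunk_fibers
    return total_fibers // app_fibers


for trunk in (8, 16):
    for app in (8, 16):
        ports = app_ports_per_ru(trunk, app)
        print(f"{trunk}-fiber MPOs, {app}-fiber applications: {ports} ports per RU")
```

Running it reproduces the four figures in the paragraph: 72 and 36 ports for an 8-fiber base, 144 and 72 for a 16-fiber base.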

The reality for many enterprise organizations is that 8-fiber applications with 4-way breakout ability will have a longer migration life based on capacity needs. However, the energy efficiencies and lower cost per gigabit of the 8-way breakouts available with 16-fiber ports may change the models sooner than originally planned. Moving from 8- to 16-fiber connections allows you to better distribute full switch capacity and, in some cases, eliminate some switches and their associated costs. In the case of a greenfield deployment, the smart money is on 16-fiber trunks, which efficiently support both future and legacy applications without wasting fiber.

Ideally, the 8-versus-16 decision is best made collaboratively by the infrastructure cabling team and the networking team. Often, however, the networking team makes the call, and the infrastructure team must find the best way to make the transition.

What about my legacy 12- or 24-fiber deployments?

While 8-fiber and 16-fiber configurations are best suited for the higher speeds that will take us to 1.6T and beyond, the reality inside many of today’s data centers—including large hyperscale facilities—is that many legacy 12-fiber trunks are still in use.

Let’s assume the decision is to go from 12- and 24-fiber duplex deployments to 8- and 16-fiber parallel applications. How do you make that transition without a complete rip-and-replace?

One way to do this is by using adapters and array cables—for example, using LCs on the front of a fiber patch panel and an array that terminates in four duplex LCs connected to an 8-fiber MPO. You also could break out a 24-fiber trunk into three 8-fiber arrays or two 24-fiber cables connected to three 16-fiber MPOs. One downside to the MPO adapter/array solution is cable management. Breakout lengths need to be practical to be useful. Additionally, MPO-based transceivers have alignment pins built in, requiring non-pinned equipment cords. Equipment cords that are non-pinned on both ends are the simplest for technicians in the field. But the right combination of pinned/non-pinned must appear throughout the channel.
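The trunk-to-MPO combinations mentioned above fall out of a least-common-multiple calculation. The following Python sketch uses a helper name of our own, `smallest_clean_conversion`, and looks only at fiber counts; it says nothing about polarity, pinning or breakout lengths, which still have to be worked out for a real channel.

```python
from math import lcm  # available in Python 3.9 and later


def smallest_clean_conversion(trunk_fibers: int, app_fibers: int) -> tuple[int, int, int]:
    """Smallest grouping that uses every fiber on both sides.

    Returns (trunks_needed, app_connectors, total_fibers).
    """
    total = lcm(trunk_fibers, app_fibers)
    return total // trunk_fibers, total // app_fibers, total


for trunk in (12, 24):
    for app in (8, 16):
        trunks, connectors, total = smallest_clean_conversion(trunk, app)
        print(f"{trunks} x {trunk}-fiber trunk(s) -> "
              f"{connectors} x {app}-fiber MPO(s) ({total} fibers, none stranded)")
```

For 24-fiber trunks this yields the two cases in the text: one trunk into three 8-fiber arrays, and two trunks into three 16-fiber MPOs. For 12-fiber trunks it takes two trunks to feed three 8-fiber connectors and four trunks to feed three 16-fiber connectors.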

The bottom line: There are a lot of ways to make the numbers work, but the goal is always the same. Support the application requirements in the most efficient way possible without stranding fibers at the ports. Network managers should prioritize infrastructure solutions that can offer both the older 12- and 24-fiber designs and the 8- and 16-fiber configurations without requiring time-consuming panel modifications in the field.

Slicing and dicing capacity within the panel

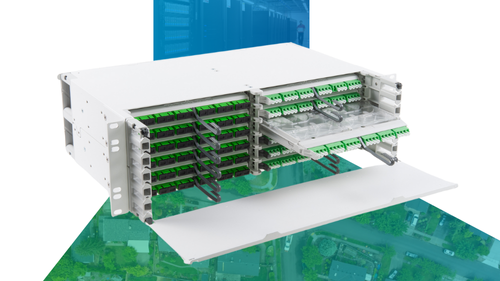

Another key requirement is for more design flexibility at the patch panel. In a traditional fiber platform design, components such as modules, cassettes and adapter packs are panel specific. As a result, swapping out components that have different configurations means changing the panel as well. The most obvious impact of this limitation is the extra time and cost to deploy both new components and new panels. At the same time, data center customers must also contend with additional product ordering and inventory costs.

In contrast, a design in which all panel components are essentially interchangeable and fit in a single, common panel enables designers and installers to reconfigure and deploy fiber capacity in less time and at lower cost. It also enables data center customers to streamline their infrastructure inventory and its associated costs.

Infrastructure support for higher-speed migrations

So, to sum up: The more complex and crowded the data center environment grows, the more challenging the migration to higher speeds becomes. The degree of difficulty increases when the migration involves the (eventual) move to different fiber configurations. This is where data center managers find themselves. How they transition from their legacy 12- and 24-fiber deployments to more application-friendly 8- and (particularly) 16-fiber breakouts will determine their ability to leverage capacities of 800G and beyond for the benefit of their organizations. The same can be said regarding the amount of design flexibility they have at the patch panel.

These are the challenges that network managers at cloud and hyperscale facilities are now facing. On the positive side, CommScope has helped clear the way to a more seamless and efficient migration. To learn more about our Propel™ modular high-speed fiber infrastructure—with native support for 16-, 8-, 12- and 24-fiber based designs—check out the current Propel Design Guide.