Today, broadband networks don’t have a way of filtering traffic based on latency. That creates a significant disadvantage for time-sensitive applications, like online gaming and day trading, which are growing in popularity. Fortunately, that’s about to change. Low-Latency DOCSIS (LLD), as proposed by CableLabs, specifies a logical separation between regular traffic and latency-sensitive traffic. By creating two separate traffic queues, multiple-system operators (MSOs) have the opportunity not only to improve the user experience but also to offer a new service tier that generates additional revenue.

The importance of low latency

As far as network performance is concerned, speed and latency are very different metrics. While they’re related (and often conflated), they have unique requirements. High speeds enable the transfer of large amounts of data. A good example is streaming 4K video from YouTube or downloading a 25 GB software patch for a game. For this to happen quickly, high bandwidth is imperative. However, there are times when speed alone isn’t enough to deliver the best quality experience. A clear example of this is the popular online multiplayer game Call of Duty. The real-time rendering of the dynamic game environment is extremely demanding, with data packets typically transmitted at a bitrate of 100 kbps to 200 kbps in both the upstream and the downstream. But that’s only half the story.

Because Call of Duty is online, interactive, and involves multiple players competing and collaborating over a common server, latency has an arguably greater impact on gameplay than speed. In this fast-paced environment, millisecond connection delays are the difference between success and failure. As such, low latency is a well-recognized advantage in online multiplayer games. With lower latency (that is, less time spent by packets reaching the Call of Duty gaming server and returning a response to the player), players can literally see and do things in the game before others can. More importantly, it’s an advantage that many gamers are willing to pay for. If we apply this same logic and competitive edge to finance and day trading, it’s obvious why professionals and amateurs alike would be willing to pay for it too.

What causes latency?

To minimize latency, it’s helpful to understand its underlying causes. There are multiple sources of latency in DOCSIS networks, including protocol- and application-dependent queuing delays, propagation delay, request-grant delay, channel configuration, and switching and forwarding delays.

Typically, all network traffic merges into a single DOCSIS service flow. This traffic includes both streams that build queues—like video streaming apps—and streams that don’t build queues—like multiplayer gaming apps. The challenge that this single-flow architecture presents is a lack of distinction between the two types of traffic. Both the gaming app and the video streaming app are treated the same on the network, but their needs are very different: A traffic jam might not matter for the purpose of watching a YouTube video, which can buffer and play asynchronously, but for competing in a multiplayer game of Call of Duty, being stuck in the streaming video queue is a death sentence. The indiscriminate treatment of traffic on today’s DOCSIS networks adds latency and jitter precisely where it’s unwanted.
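To put rough numbers on that traffic jam, here is a minimal Python sketch of the wait a newly arrived packet faces in a single first-in, first-out queue. The link rate, packet size, and backlog depth are illustrative assumptions rather than measurements, and the function name `fifo_wait_ms` is hypothetical.

```python
def fifo_wait_ms(bytes_ahead: int, link_rate_bps: float) -> float:
    """Milliseconds a new packet waits while bytes_ahead drain at link_rate_bps."""
    return bytes_ahead * 8 / link_rate_bps * 1000


# Assumed scenario: 250 video packets of 1500 bytes each are already queued
# on a 50 Mbps link when a small gaming packet arrives.
video_backlog_bytes = 250 * 1500
busy_wait = fifo_wait_ms(video_backlog_bytes, 50e6)
idle_wait = fifo_wait_ms(0, 50e6)
print(f"behind video backlog: {busy_wait:.1f} ms, empty queue: {idle_wait:.1f} ms")
```

Under these assumptions the gaming packet waits about 60 ms behind the video backlog, even though its own bandwidth needs are tiny. The delay comes entirely from sharing a queue with traffic that builds one.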

The advantage of Low-Latency DOCSIS

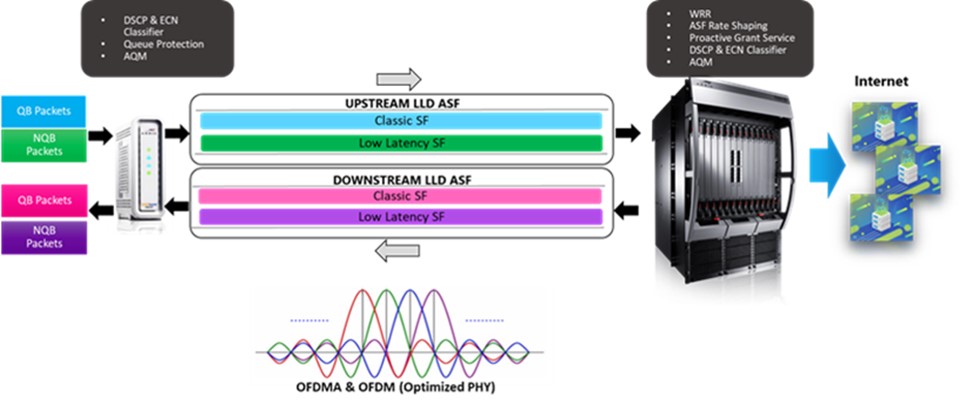

The LLD architecture uses a construct called Aggregate Service Flow (ASF) to assign its two underlying service flows to queue-building traffic (known as “classic service flow”) and non-queue-building traffic (known as “low-latency service flow”). This arrangement solves precisely the queueing problem identified above and ensures that queue-building application data in the DOCSIS access network doesn’t cause delays for time-sensitive non-queue-building data. In other words, customers’ videos won’t get in the way of their gaming.
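The separation can be pictured with a small Python model. This is a sketch under simplifying assumptions, not the DOCSIS data path: the class names, the `queue_building` label, and the packet sizes are all hypothetical, and a real system classifies packets from header fields instead of a ready-made flag.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Packet:
    app: str
    size_bytes: int
    queue_building: bool  # hypothetical label standing in for real classification


@dataclass
class AggregateServiceFlow:
    """Toy model: one aggregate holding a classic and a low-latency queue."""
    classic: deque = field(default_factory=deque)
    low_latency: deque = field(default_factory=deque)

    def enqueue(self, pkt: Packet) -> int:
        """Place pkt in its service flow; return the bytes queued ahead of it there."""
        queue = self.classic if pkt.queue_building else self.low_latency
        ahead = sum(p.size_bytes for p in queue)
        queue.append(pkt)
        return ahead


asf = AggregateServiceFlow()
for _ in range(250):
    asf.enqueue(Packet("video", 1500, queue_building=True))
game_ahead = asf.enqueue(Packet("game", 120, queue_building=False))
print(game_ahead)  # 0: the gaming packet is not behind the video backlog
```

The model only shows where each packet waits. How quickly each queue drains still depends on how the scheduler shares the link between the two flows.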

Specifically, the LLD architecture offers several new key features:

ASF service flow encapsulation – The LLD ASF manages the traffic shaping of both service flows by enforcing an Aggregate Maximum Sustained Rate (AMSR), in which the AMSR is the combined total of the low-latency and classic service flow bit rates.

Weighted Round Robin Scheduler – The granting mechanism in LLD is governed by a Weighted Round Robin inter-service flow scheduler running on the CMTS, which conditionally prioritizes the low-latency flow according to configurable settings.

Proactive Grant Service scheduling type – LLD introduces a new data scheduling type known as the Proactive Grant Service, which shortens the request-grant cycle by eliminating the need for a bandwidth request.

Service flow traffic classification – This packet classification plays a crucial role in placing a given packet into either the classic or low-latency service flow.

Active Queue Management algorithms – The new classic service flow uses the existing DOCSIS PIE algorithm, which drops packets as queues build in order to maintain a target latency. Meanwhile, the low-latency service flow implements a new AQM algorithm known as Immediate AQM, which does not drop packets but instead marks the ECN field in the IP header as Congestion Experienced (CE) to keep the queue shallow. The two AQM algorithms have coupled probabilities to ensure bandwidth capacity is shared by both of the service flows in the Aggregate Service Flow.

Queue protection – This feature categorizes packets into application data flows called Microflows and ensures that each Microflow is directed to the appropriate service flow, depending on its traffic type (queue-building, QB, or non-queue-building, NQB) and a critical latency threshold.

Latency histograms – A new histogram feature will offer greater visibility into the service flow buffers and queue build-ups.
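Two of the features above, the Weighted Round Robin scheduler and the coupled AQM probabilities, can be illustrated with a short Python sketch. It is a toy under stated assumptions and not the algorithm from the DOCSIS specification: the 3:1 weights are arbitrary, and the squared and linear coupling with a factor of 2 is borrowed from the IETF DualQ Coupled AQM design (RFC 9332), since this article does not give the exact LLD formula or parameters. The function names are hypothetical.

```python
from collections import deque


def wrr_schedule(low_latency, classic, weight_ll=3, weight_classic=1):
    """Drain two queues in weighted round-robin order (weights are illustrative)."""
    ll, cl = deque(low_latency), deque(classic)
    order = []
    while ll or cl:
        # Serve up to weight_ll low-latency packets per round...
        for _ in range(weight_ll):
            if ll:
                order.append(ll.popleft())
        # ...then up to weight_classic classic packets.
        for _ in range(weight_classic):
            if cl:
                order.append(cl.popleft())
    return order


def coupled_probabilities(base_prob, coupling=2.0):
    """Return (classic_drop_prob, low_latency_mark_prob) from one base probability.

    Assumption: the classic queue drops with the square of the base probability,
    while the low-latency queue ECN-marks linearly, scaled by a coupling factor.
    """
    classic_drop = base_prob ** 2
    ll_mark = min(1.0, coupling * base_prob)
    return classic_drop, ll_mark


order = wrr_schedule(["g1", "g2", "g3", "g4"], ["v1", "v2"])
print(order)  # ['g1', 'g2', 'g3', 'v1', 'g4', 'v2']

classic_drop, ll_mark = coupled_probabilities(0.1)
print(f"classic drop ~ {classic_drop:.3f}, low-latency mark ~ {ll_mark:.3f}")
```

The point of deriving both probabilities from one base value is that congestion in the classic queue also raises the marking rate in the low-latency queue, so neither flow can starve the other of capacity within the aggregate.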

In short, LLD has the ability to add some exciting new features to traditional DOCSIS networks. In order to understand exactly how they function and capture a glimpse of the performance gains, we set up several experiments using LLD in a DOCSIS 3.1 network. The results of these tests can be found in our technical paper.

We also invite you to reach out to us for more information on LLD and how it can work in your network. CommScope has a variety of resources available to help you capture the benefits of this specification in improving the user experience and creating new revenue opportunities.

Note to Readers: The original article was first posted in February 2021 in the publication Fast Mode.